Introduction

In 2023, Artificial Intelligence (AI) advanced significantly, above all in generative AI. This article surveys the key trends in AI development in 2023 and considers the outlook for 2024, with a focus on the transformative effects of generative AI.

Key Trends in AI Development in 2023

Generative AI Advancements

Generative AI made rapid progress in 2023, with substantial implications for industries and workforces. OpenAI's GPT-4 emerged as a prominent generative model for natural language processing and creative content generation. McKinsey's report The State of AI in 2023 emphasized the technology's potential to reshape a range of sectors, and the accompanying annual McKinsey Global Survey confirmed the explosive growth of generative AI (gen AI) tools: one-third of respondents said their organizations were using gen AI regularly in at least one business function.

Multi-modal Learning

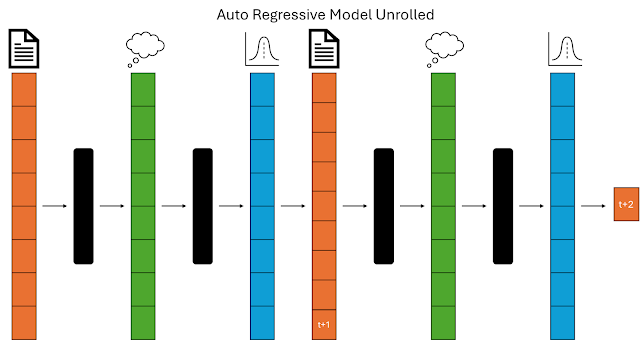

Another notable trend was the rise of multi-modal learning, in which AI systems process and relate information from more than one modality, such as text, images, and audio. Combining modalities opened new applications and made models more versatile than those limited to a single input type.

Economic Impact of AI

The economic impact of AI became increasingly pronounced in 2023, driving discussions on the future of work and productivity. According to an estimate by PwC, AI could contribute up to $15.7 trillion to the global economy in 2030, more than the combined output of China and India at the time of the estimate. Of this, $6.6 trillion is likely to come from increased productivity and $9.1 trillion from consumption-side effects.
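As a quick check on the figures above, the two components sum to the headline estimate, and their shares follow directly. The percentages are rounded to the nearest whole percent and are derived here for illustration only; they are not quoted from the underlying estimate.

```latex
\begin{align*}
\text{Total contribution} &= \$6.6\,\text{trillion} + \$9.1\,\text{trillion} = \$15.7\,\text{trillion} \\
\text{Productivity share} &= \frac{6.6}{15.7} \approx 42\% \\
\text{Consumption-side share} &= \frac{9.1}{15.7} \approx 58\%
\end{align*}
```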

Outlook for 2024

Monumental Leaps in AI Capabilities

AI capabilities are expected to advance substantially in 2024, particularly in areas that demand complex problem-solving, with progress in quantum computing cited as one possible driver. If these advances materialize, they could widen the range of problems to which AI can be applied.

Rapid Transformations Across Industries

Industries are expected to change rapidly in 2024, with generative AI playing a pivotal role in reshaping business operations and driving regulatory debate. Published studies have examined real-world applications of AI in sectors including healthcare, finance, agriculture, retail, energy, and automotive, using case studies to illustrate its impact. The AI Dossier likewise highlights the most compelling AI use cases in six major industries.

Challenges and Potential Failures in Generative AI Initiatives

Despite the promising outlook, 2024 also brings risks. Some predictions suggest that the majority of generative AI initiatives may run into obstacles or fail outright, which underscores how complex and uncertain this technology remains.

Industry Outlook for Generative AI

The industry outlook for generative AI combines early promise, potential payoffs, and uncertainty. In the energy, resources, and industrials sector, AI is helping organizations innovate rapidly, reduce their climate impact, and increase productivity. In hospitality, a study of one company's adoption of AI offers insight into how the technology may change that sector.

Impact of AI on Worldwide IT Spending

Projections for 2024 indicate that AI will have a significant effect on worldwide IT spending, with substantial growth expected as AI technologies are integrated across sectors. This shift is likely to shape technology investment priorities and strategies.

Conclusion

The year 2023 was a pivotal phase in the evolution of generative AI and set the stage for the year ahead. In 2024, AI is poised for major gains in capability and rapid change across industries, alongside real challenges and uncertainties. Generative AI continues to shape the future of technology and innovation on a global scale.